When integrating scripts into your workflows and scheduled jobs, it's essential to be mindful of their performance impact. Because the user interface (UI) pauses and waits for event-driven scripts to finish executing, efficient scripts contribute directly to a positive user experience.

Script Execution and UI Responsiveness

Scripts attached to event triggers execute synchronously, meaning the UI remains unresponsive until these scripts complete their tasks. This blocking behavior emphasizes the necessity of keeping scripts concise and optimized. Long-running or inefficient scripts can significantly degrade performance, causing noticeable delays and negatively affecting the user's perception of system responsiveness.

Execution Time Limit

To protect overall system performance, the platform enforces a 60-second execution limit on scripts, and any script that exceeds it is automatically terminated. The limit is intentional: it prevents scripts from inadvertently locking up the UI for long periods and keeps resources available for all users.
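One way to stay well clear of the 60-second limit is to give a script its own, smaller time budget and stop taking on new work once that budget is spent, leaving the remainder for a later run. The following is a minimal sketch of that pattern, written in Python for illustration; the same structure applies in a PowerShell script. The `process_item` callback and the 10-second budget are hypothetical values chosen for the example, not platform settings.

```python
import time
from typing import Callable, Iterable, List, Tuple, TypeVar

T = TypeVar("T")


def process_within_budget(
    items: Iterable[T],
    process_item: Callable[[T], None],  # hypothetical per-item work
    budget_seconds: float = 10.0,  # hypothetical budget, well under the 60 s limit
    clock: Callable[[], float] = time.monotonic,
) -> Tuple[List[T], List[T]]:
    """Process items until the time budget is spent.

    Returns (done, remaining) so the caller can defer the remaining
    items, for example to a queue handled by a scheduled job.
    """
    deadline = clock() + budget_seconds
    pending = list(items)
    done: List[T] = []
    for index, item in enumerate(pending):
        # Check the budget before starting each item, not after.
        if clock() >= deadline:
            return done, pending[index:]
        process_item(item)
        done.append(item)
    return done, []
```

Checking the deadline before each item means the script never starts work it has no time left to finish; anything left in `remaining` can be picked up by a later run instead of risking termination.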

Customer Responsibility for Script Optimization

Script performance and optimization are primarily the responsibility of the customer who develops the scripts. While our platform provides the tools and environment for script execution, it cannot automatically optimize or correct the efficiency of the scripts themselves. Customers should therefore proactively optimize their scripts to keep performance efficient and smooth.

As a general rule of thumb, aim to keep individual scripts, as well as execution chains in which multiple scripts trigger off the same event, down to just a few seconds whenever possible. Also keep in mind that every GET, ADD, SET, or REMOVE command issued in a script incurs some computational cost. For most actions this is typically a matter of milliseconds, but the cost adds up significantly when many operations are executed, particularly within loops. Keeping these costs in check helps maintain the user experience and reduces the likelihood of timeouts.
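To see how per-command costs add up, consider a hypothetical loop that issues one GET and one SET per record. The figures below (500 records, about 20 ms per command) are illustrative assumptions, not measured platform numbers; the point is that total time grows linearly with the number of commands issued.

```python
def estimated_seconds(operations: int, ms_per_operation: float) -> float:
    """Rough execution time: number of commands times average cost per command."""
    return operations * ms_per_operation / 1000.0


# Hypothetical loop: 500 records, one GET and one SET per record, ~20 ms per command.
records = 500
commands_per_record = 2
loop_seconds = estimated_seconds(records * commands_per_record, ms_per_operation=20)
print(f"{loop_seconds:.1f} s of the 60 s limit")  # prints "20.0 s of the 60 s limit"
```

Even at a modest per-command cost, this loop spends 20 seconds on commands alone, far beyond the few-second target. Retrieving the records once up front instead of inside the loop removes 500 of those 1,000 commands before any other change.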

Best Practices for Script Optimization

Minimize Commands: Reduce the number of system commands that initiate CRUD operations within each script. Consolidate requests when possible to limit overhead.

Efficient Data Handling: Only retrieve and process the data necessary for the current task. Avoid overly broad queries or large dataset processing within scripts.

Caching and Reuse: Use caching mechanisms or store intermediate results to avoid redundant processing in frequently executed scripts. For simple in-system caching, leverage custom objects as a key-value store, saving compressed JSON structures that are easily queried and decompressed back into PowerShell objects. For fast out-of-system caching or storage, you could leverage cloud storage services such as Azure Storage or Amazon S3, or an in-memory data store such as Redis for high-performance scenarios.

Error Handling and Logging: Implement efficient error handling and keep logging to the minimum necessary to troubleshoot issues, so that it does not unnecessarily prolong script execution. Avoid excessive logging with the Write-Output command, as each call introduces a small amount of additional overhead and increases storage per execution.
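The compressed-JSON approach described under Caching and Reuse can be sketched as a round trip: serialize to JSON, compress, encode as text for storage, and reverse the steps on read. The sketch below uses Python's standard `json`, `gzip`, and `base64` modules to show the idea; in a PowerShell script the same steps apply. The `KeyValueCache` class is a hypothetical stand-in for a custom object used as a key-value store; how you actually read and write custom object fields, and any field size limits, are not covered here.

```python
import base64
import gzip
import json
from typing import Any, Dict, Optional


def compress_value(value: Any) -> str:
    """Serialize to compact JSON, gzip-compress, and Base64-encode as text."""
    raw = json.dumps(value, separators=(",", ":")).encode("utf-8")
    return base64.b64encode(gzip.compress(raw)).decode("ascii")


def decompress_value(encoded: str) -> Any:
    """Reverse compress_value: Base64-decode, decompress, and parse the JSON."""
    raw = gzip.decompress(base64.b64decode(encoded))
    return json.loads(raw.decode("utf-8"))


class KeyValueCache:
    """Hypothetical stand-in for a custom object used as a key-value store."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}  # key -> compressed, encoded JSON text

    def set(self, key: str, value: Any) -> None:
        self._store[key] = compress_value(value)

    def get(self, key: str) -> Optional[Any]:
        encoded = self._store.get(key)
        return None if encoded is None else decompress_value(encoded)


cache = KeyValueCache()
cache.set("project-settings", {"region": "us-east", "reviewers": ["ana", "ben"]})
settings = cache.get("project-settings")  # the original dict, decompressed
```

Base64 encoding keeps the compressed bytes safe to store in a plain text field; for very small values the compression and encoding overhead may outweigh the savings, so this pays off mainly for larger structures that are read often.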

Monitoring, Reporting, and Feedback

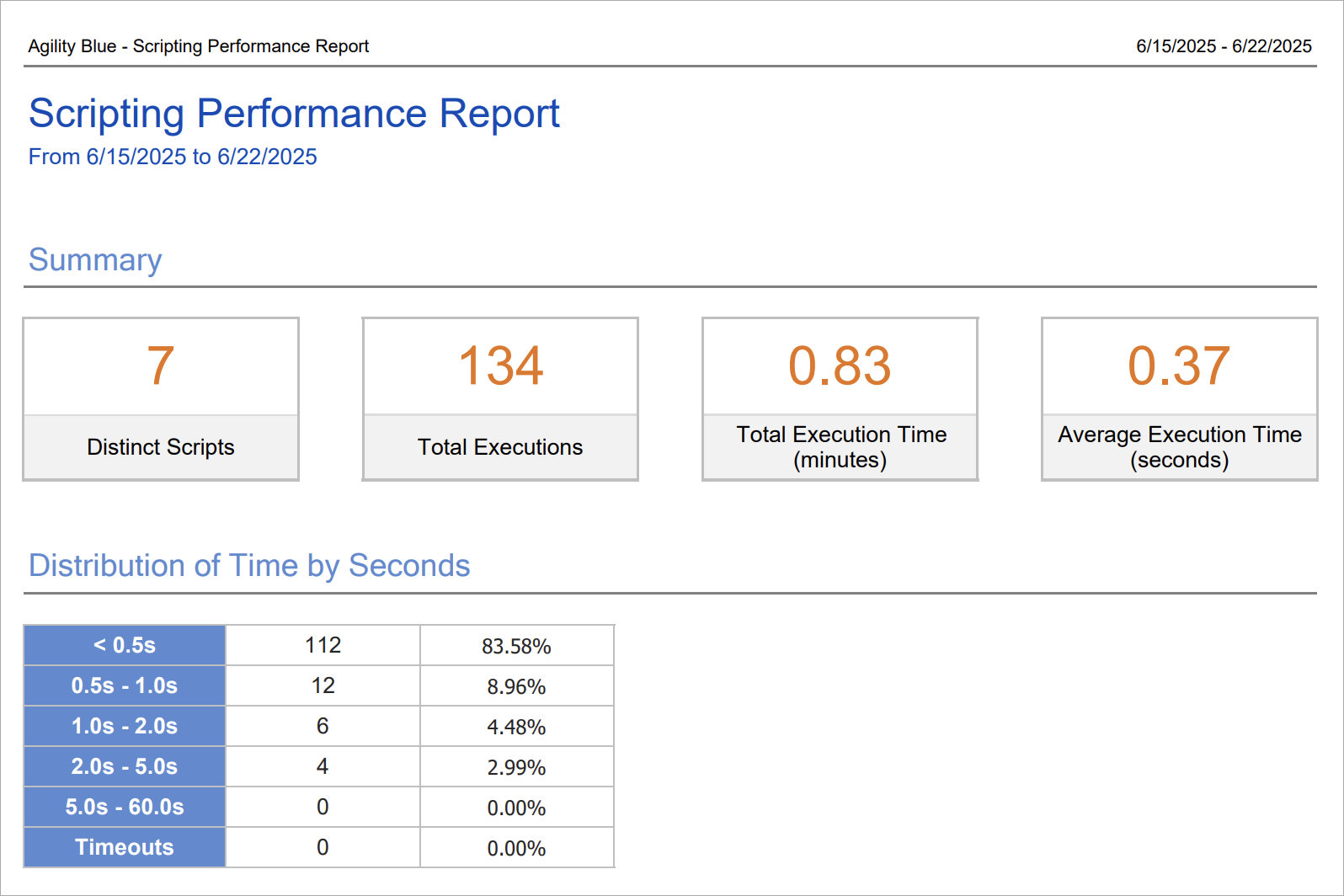

Regularly monitor script performance through available metrics and logs. Agility Blue provides a scripting performance report that can be run against a specific time period to analyze total execution time per script, identify when scripts execute throughout the day, assess the distribution of execution times, and detect timeouts. Establish performance benchmarks and periodically review script execution times. User feedback is invaluable: regularly solicit input from end users to identify scripts that cause performance concerns or disruptions.
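When establishing benchmarks, it can help to summarize execution durations per script. The minimal Python sketch below assumes you already have a list of (script name, duration in seconds) pairs from your own logging; that data shape and the script names are hypothetical and do not describe the format of the Agility Blue report. It computes the run count, total time, 95th percentile (nearest-rank method), maximum, and the number of runs that reached the 60-second limit.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

TIME_LIMIT_SECONDS = 60.0  # execution limit described earlier in this article


def nearest_rank_percentile(values: List[float], pct: float) -> float:
    """Return the pct-th percentile using the nearest-rank method."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize_runs(runs: List[Tuple[str, float]]) -> Dict[str, Dict[str, float]]:
    """Group (script_name, seconds) pairs and compute simple per-script statistics."""
    by_script: Dict[str, List[float]] = defaultdict(list)
    for name, seconds in runs:
        by_script[name].append(seconds)
    summary: Dict[str, Dict[str, float]] = {}
    for name, durations in by_script.items():
        summary[name] = {
            "runs": len(durations),
            "total_s": sum(durations),
            "p95_s": nearest_rank_percentile(durations, 95),
            "max_s": max(durations),
            # Assumption: a run logged at or above the limit was terminated.
            "timeouts": sum(1 for d in durations if d >= TIME_LIMIT_SECONDS),
        }
    return summary


report = summarize_runs([
    ("tag-on-save", 0.4), ("tag-on-save", 0.6), ("tag-on-save", 2.0),
    ("nightly-sync", 60.0), ("nightly-sync", 12.5),
])
```

Tracking the 95th percentile alongside the total shows whether a script is occasionally slow or consistently slow, which helps decide between optimizing its logic and moving it to a scheduled job.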

Strategies for Handling Intensive Script Processing

If you notice scripts are frequently taking longer than ideal, it's essential to review and optimize their logic:

Assess Complexity: Review your script logic to determine its computational complexity using standard big-O notation (O(f(n))), and identify inefficient loops or repeated operations. For example, if your script repeatedly retrieves data and updates it within a loop, consider retrieving the entire dataset up front and performing batch updates to reduce overhead. Also make sure loops short-circuit, exiting as soon as there is no further need to keep iterating.

Scheduled Batch Updates: Determine whether immediate data updates are critical. If updates can be delayed without impacting business processes, consider switching to a scheduled, job-based approach. Custom queues can be used to batch process tasks efficiently, spreading the load and improving overall performance.

Offload Heavy Processing: For scripts involving intensive computation or heavy data processing, consider offloading work to external processes, such as Azure Functions or AWS Lambda functions. This approach promotes a "fire-and-forget" model, immediately returning control to the user interface while the external service handles the processing asynchronously.
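The looping advice under Assess Complexity can be illustrated with a small Python sketch. `FakeClient` and its `get_one`, `get_many`, `set_one`, and `set_many` methods are hypothetical stand-ins, not Agility Blue commands; they simply count calls so the per-item loop and the batched version can be compared. Whether your environment offers bulk retrieval and batched updates is an assumption to verify.

```python
from typing import Dict, List, Optional


class FakeClient:
    """Hypothetical stand-in for platform commands; counts every call made."""

    def __init__(self, records: Dict[int, dict]) -> None:
        self.records = records
        self.calls = 0

    def get_one(self, record_id: int) -> dict:
        self.calls += 1
        return dict(self.records[record_id])

    def get_many(self, record_ids: List[int]) -> List[dict]:
        self.calls += 1
        return [dict(self.records[i]) for i in record_ids]

    def set_one(self, record: dict) -> None:
        self.calls += 1
        self.records[record["id"]] = record

    def set_many(self, records: List[dict]) -> None:
        self.calls += 1
        for record in records:
            self.records[record["id"]] = record


def mark_reviewed_per_item(client: FakeClient, ids: List[int]) -> None:
    # One GET and one SET per record: 2 * len(ids) commands, O(n) round trips.
    for record_id in ids:
        record = client.get_one(record_id)
        record["reviewed"] = True
        client.set_one(record)


def mark_reviewed_batched(client: FakeClient, ids: List[int]) -> None:
    # One bulk GET, then a single batched SET containing only changed records.
    records = client.get_many(ids)
    changed = [r for r in records if not r.get("reviewed")]
    for record in changed:
        record["reviewed"] = True
    if changed:  # skip the SET entirely when nothing changed
        client.set_many(changed)


def first_flagged(records: List[dict]) -> Optional[dict]:
    # Short-circuit: return as soon as a match is found instead of scanning everything.
    for record in records:
        if record.get("flagged"):
            return record
    return None
```

With 100 records, the per-item loop issues 200 commands while the batched version issues 2. Combined with the per-command cost discussed earlier, a difference of that size can decide whether a script finishes within a few seconds.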

Balancing Functionality and Performance

Scripts offer powerful opportunities for customization and workflow automation but come with inherent trade-offs. Continuously evaluate scripts to strike the right balance between achieving business requirements and maintaining optimal system responsiveness and user satisfaction.